
With a Little Help from Your Friends

September 2014. Originally published by Scientific American:

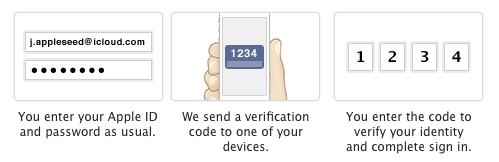

Guest Blog

Today, maintaining privacy without guided assistance is an onerous task: the initial costs are high, the immediate rewards low, and the solutions fragile and constantly evolving. The moment after you perfectly balance your Facebook privacy settings, a new “feature” is introduced and suddenly potential employers can view your bachelor party photos. Most of us admit we should password-protect our smartphones, yet half of us don’t bother, to avoid the “enormous” burden of typing in a PIN, leaving our private text messages and bank accounts wide open. Frankly, most people just give up and hope that the anonymity of the crowd, their own boring lives, and wishful thinking are sufficient protection. But they are mistaken. Good solutions already exist today, but better systems will still be needed.

How can we bring a semblance of order and control back to the individual in the face of an increasingly complex and “transparent” world? Not without help. And that help will come in the form of an automated, personal privacy adviser.

While at Bell Labs in the 1990s, my colleagues and I tried to make privacy simpler by adapting to human strengths and foibles. One approach was to replace numeric passwords with clicks on spots, chosen by the user, in an image. It turns out our visual memory is much stronger than our capacity to recall a random string of numbers. Today, Microsoft offers a related Picture Password interface in Windows 8.

We also relieved users of the burden of memorizing constantly changing passwords, or of having the discipline to scan their credit card bills for fraudulent charges. We did this by inventing an early form of two-factor authentication. One factor is your memorized password or credit card number. The other is a supplemental one-time key sent to your phone immediately after you enter the initial password. Both factors are required for authorization.
If someone stole your password from a yellow Post-it note (or by hacking the corporate server), they would also have to steal your phone to obtain the second factor before logging in, blunting the value of a massive, remote online password attack. We also envisioned credit card transactions authorized not by signing your name, but by a text message seeking real-time approval. If someone “skimmed” your ATM card, they would also have to steal your phone to complete the transaction.
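The two-factor scheme described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Bell Labs design: the class `TwoFactorLogin`, its methods, and the `outbox` list standing in for a text-message gateway are all hypothetical, and storing the password as a salted PBKDF2 hash is a modern choice the article does not specify. The first step checks the memorized password and, only if it is correct, "texts" a fresh six-digit one-time code to the registered phone; the second step accepts that code once, before it expires.

```python
import hashlib
import hmac
import secrets
import time


def hash_password(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash so a stolen password file is hard to reverse.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class TwoFactorLogin:
    """Hypothetical server: memorized password (factor 1) plus a one-time code
    delivered to the user's phone (factor 2). Both are required."""

    def __init__(self, code_ttl_seconds: float = 120.0):
        self.users = {}    # username -> (salt, password_hash, phone)
        self.pending = {}  # username -> (one_time_code, expiry_timestamp)
        self.outbox = []   # stand-in for an SMS gateway: (phone, code) pairs
        self.code_ttl = code_ttl_seconds

    def register(self, user: str, password: str, phone: str) -> None:
        salt = secrets.token_bytes(16)
        self.users[user] = (salt, hash_password(password, salt), phone)

    def start_login(self, user: str, password: str) -> bool:
        """Step 1: verify the password; if correct, send a fresh code to the phone."""
        record = self.users.get(user)
        if record is None:
            return False
        salt, stored, phone = record
        if not hmac.compare_digest(stored, hash_password(password, salt)):
            return False
        code = f"{secrets.randbelow(10**6):06d}"  # random six-digit one-time code
        self.pending[user] = (code, time.monotonic() + self.code_ttl)
        self.outbox.append((phone, code))  # "text" the code to the phone
        return True

    def finish_login(self, user: str, code: str) -> bool:
        """Step 2: accept only the code sent to the phone, once, before it expires."""
        entry = self.pending.pop(user, None)  # pop: each code gets one try, right or wrong
        if entry is None:
            return False
        expected, expiry = entry
        return time.monotonic() <= expiry and hmac.compare_digest(expected, code)


if __name__ == "__main__":
    server = TwoFactorLogin()
    server.register("alice", "correct horse", "+1-555-0100")
    server.start_login("alice", "correct horse")  # factor 1 passes; code is "texted"
    phone, code = server.outbox[-1]               # only the phone's holder sees this
    print(server.finish_login("alice", code))     # True
    print(server.finish_login("alice", code))     # False: the code was already used
```

A thief who copied the password from a Post-it note can pass the first step, but without the phone never sees the code, so the second step fails. Making each code single-use and short-lived limits how much an intercepted or guessed code is worth.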

Credit card security? (Credit: EP Technology via Flickr)

Given this perspective, what trends might lead to more secure, easier-to-implement privacy solutions that we can manage in an intricate and, frankly, hostile world? At Bell Labs, our research was informed by a set of scenarios projecting future capabilities and threats. The visual password grew out of our knowledge of the growing dominance of graphical interfaces like Windows. In the late 1980s we projected a complete shift away from wired telephony, and predicted that two-way personal devices would arrive in time to receive the second factor. Based on this program in “scenario planning” (which I continue today in my own entrepreneurial work, and which I teach at Columbia University and Parsons), I see three streams converging to a single point.

First, we are no longer able to flourish in a complex environment without automated assistance. The revolution is already upon us. Computers, not unaided and unpracticed human driving skills, now keep our cars from skidding on ice. Soon, autonomous vehicles will replace cab drivers. Anti-virus software scans incoming messages on our behalf, on the alert for malevolent Trojan horses we might otherwise click on. IBM is fielding systems that diagnose disease or provide marketing assistance. These artificial replacements for human instinct and judgment are growing more powerful every year, and will continue to do so for decades. They should be harnessed to protect our privacy, rather than to pry ever deeper into our lives.

Second, in volume (though perhaps not yet in quality), the cloud now contains the majority of recorded human knowledge and behavior. Our privacy trails are increasingly diffuse; we cannot hide our precious secrets in a safe deposit box or behind a black curtain. Privacy is too important to be managed on a case-by-case basis. Any future solution must live in the cloud, and work with and across all the services we deem essential, with or without those services’ cooperation.

Third, groups rather than individuals are now targeted for attack. Twenty years ago, hardly any business knew my purchase habits, and Russian gangs could not read a credit card slip tossed in a garbage pail in New Jersey, let alone correlate thousands of seemingly innocuous data points into an accurate psychological profile. Today, everyone is swept up by massive phishing expeditions, and information, once released, is hard to recover. So knowledge of the entire virtual environment, well beyond that held by a single individual, is the third key to staying ahead of privacy threats.

Thus I imagine the next step in privacy control will be a user agent that lives in the cloud and acts autonomously to protect your digital life and property.

The original GORT, a member of an interstellar police force in the 1951 science fiction film “The Day the Earth Stood Still”. (Credit: Peter Petrus via Flickr)

Let’s call this agent “GORT”. GORT would ask about your privacy intentions, and also learn actively from your actions and words, in the same way advertisers infer your intent from shopping patterns and social media posts. It would stand between you and the World Wild Web. GORT would work like a personal firewall: you could route texts, mail and Facebook posts through it, and it would flag potentially risky activity. For example: “Do you really mean to send this note (which GORT judges as sarcastic and personal) to the entire sales division?” A job saved. With growing trust, you would eventually grant GORT access to all your service accounts.

GORT would also learn from information shared by other people’s GORTs. When Facebook makes a privacy change, GORT would quickly log in on your behalf and readjust the settings to maintain your privacy goals. GORT would proactively read blogs across the net, on guard for disparaging comments or for actions indicating that your privacy is about to be breached. Many companies offer two-factor authentication today, although adoption has been slow due to lack of visibility, perceived complexity, and inconvenience: a challenge for you, but not for GORT. GORT would implement PGP-like encryption to ensure your communications could be read only by the intended party, and could verify that party’s identity in the first place. GORT would act as a VPN to help ensure anonymity when anonymity is required. And if GORT were unsure which action to take, it would reach out and ping a human: you.

Of course, GORT would become a target for scammers, and would be gamed by the very service providers it is designed to manage. But once the game is afoot, all GORTs would become aware of the shifting rules of engagement and respond accordingly, faster and more efficiently than you could ever hope to alone. Is relying on a computerized intelligence to have your best interests at heart a scary prospect? Perhaps, but we already rely on computers to fly our planes, invest our pension funds and keep us entertained. We cannot maintain our own sense of identity without their help.


Contact Greg Blonder by email. Genuine Ideas, LLC.